Difference between revisions of "Cyberinfrastructure"

From Montana Tech High Performance Computing

| (24 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

== HPC Architecture == | == HPC Architecture == | ||

| − | The Montana Tech HPC cluster contains 1 management node, | + | The Montana Tech HPC (oredigger cluster) contains 1 management node, 26 compute nodes, and a total of 91 TB NFS storage systems. There is an additional computing server (copper). |

| − | + | Twenty-two compute nodes contain two 8-core Intel Xeon 2.2 GHz Processors (E5-2660) and either 64 or 128 GB of memory. Two of these nodes are [[GPU Nodes]], with three NVIDIA Tesla K20 accelerators and 128 GB of memory. Hyperthreading is enabled, so 704 threads can run simultaneously on just the XEON CPUs. The remaining four nodes feature the Intel 2nd Generation Xeon Scalable Processors (48 CPU Cores and 192 GB Ram per node). Internally, a 40 Gbps InfiniBand (IB) network interconnects the nodes and the [[storage]] system. | |

| − | The system has a theoretical peak performance of | + | The system has a theoretical peak performance of 14.2 TFLOPS without the GPUs. The GPUs alone have a theoretical peak performance of 7.0 TFLOPS for double precision floating point operations. So the entire cluster has a theoretical peak performance of over 21 TFLOPS. |

| − | The operating system is | + | The operating system is Rocky Linux 8.6 and Warewulf is used to maintain and provision the compute nodes (stateless). |

| Line 13: | Line 13: | ||

|+<span style="color:#925223"> Head Node </span> | |+<span style="color:#925223"> Head Node </span> | ||

|- | |- | ||

| − | | '''CPU'''|| Dual E5-2660 (2.2 GHz, 8-cores) | + | | '''CPU'''|| Dual E5-2660 (2.2 GHz, 2x 8-cores) |

|- | |- | ||

| '''RAM'''|| 64 GB | | '''RAM'''|| 64 GB | ||

|- | |- | ||

| − | | '''Disk''' || | + | | '''Disk''' || 1 TB SSD |

|} | |} | ||

</div> | </div> | ||

| Line 24: | Line 24: | ||

|+<span style="color:#925223"> Copper Server </span> | |+<span style="color:#925223"> Copper Server </span> | ||

|- | |- | ||

| − | | '''CPU'''|| Dual E5-2643 v3 (3.4 GHz, 6-cores) | + | | '''CPU'''|| Dual E5-2643 v3 (3.4 GHz, 2x 6-cores) |

|- | |- | ||

| '''RAM'''|| 128 GB | | '''RAM'''|| 128 GB | ||

| Line 45: | Line 45: | ||

</div> | </div> | ||

<div class="large-3 columns"> | <div class="large-3 columns"> | ||

| + | |||

</div> | </div> | ||

| Line 50: | Line 51: | ||

<div class="row"> | <div class="row"> | ||

| + | <div class="large-3 columns"> | ||

| + | {| | ||

| + | |+<span style="color:#925223"> 4 Compute Nodes (NEW) </span> | ||

| + | |- | ||

| + | | '''CPU'''|| Dual Xeon Platinum 8260 (2.40 GHz, 2x 24-cores) | ||

| + | |- | ||

| + | | '''RAM'''|| 192 GB | ||

| + | |- | ||

| + | | '''Disk''' || 256 GB SSD | ||

| + | |- | ||

| + | | '''Nodes''' || cn31~cn34 | ||

| + | |} | ||

| + | </div> | ||

<div class="large-3 columns"> | <div class="large-3 columns"> | ||

{| | {| | ||

|+<span style="color:#925223"> 14 Compute Nodes </span> | |+<span style="color:#925223"> 14 Compute Nodes </span> | ||

|- | |- | ||

| − | | '''CPU'''|| Dual E5-2660 (2.2 GHz, 8-cores) | + | | '''CPU'''|| Dual E5-2660 (2.2 GHz, 2x 8-cores) |

|- | |- | ||

| '''RAM'''|| 64 GB | | '''RAM'''|| 64 GB | ||

| Line 60: | Line 74: | ||

| '''Disk''' || 450 GB | | '''Disk''' || 450 GB | ||

|- | |- | ||

| − | | '''Nodes''' || | + | | '''Nodes''' || cn0~cn11, cn13, cn14 |

|} | |} | ||

</div> | </div> | ||

| Line 67: | Line 81: | ||

|+<span style="color:#925223"> 6 Compute Nodes </span> | |+<span style="color:#925223"> 6 Compute Nodes </span> | ||

|- | |- | ||

| − | | '''CPU'''|| Dual E5-2660 (2.2 GHz, 8-cores) | + | | '''CPU'''|| Dual E5-2660 (2.2 GHz, 2x 8-cores) |

|- | |- | ||

| '''RAM'''|| 128 GB | | '''RAM'''|| 128 GB | ||

| Line 73: | Line 87: | ||

| '''Disk''' || 450 GB | | '''Disk''' || 450 GB | ||

|- | |- | ||

| − | | '''Nodes''' || | + | | '''Nodes''' || cn12, cn15~cn19 |

|} | |} | ||

</div> | </div> | ||

| + | |||

| + | <div class="large-3 columns"> | ||

| + | [[File:Cluster2.jpg|280px|"maintain"]] | ||

| + | </div> | ||

| + | |||

| + | |||

| + | |||

| + | </div> | ||

| + | |||

| + | <div class="row"> | ||

<div class="large-3 columns"> | <div class="large-3 columns"> | ||

{| | {| | ||

|+<span style="color:#925223"> 2 GPU Nodes </span> | |+<span style="color:#925223"> 2 GPU Nodes </span> | ||

|- | |- | ||

| − | | '''CPU'''|| Dual E5-2660 (2.2 GHz, 8-cores) | + | | '''CPU'''|| Dual E5-2660 (2.2 GHz, 2x 8-cores) |

|- | |- | ||

| '''RAM'''|| 128 GB | | '''RAM'''|| 128 GB | ||

| Line 88: | Line 112: | ||

| '''GPU''' || Three nVidia Tesla K20 | | '''GPU''' || Three nVidia Tesla K20 | ||

|- | |- | ||

| − | | '''Nodes''' || | + | | '''Nodes''' || cn20, cn21 |

|} | |} | ||

</div> | </div> | ||

<div class="large-3 columns"> | <div class="large-3 columns"> | ||

| + | |||

</div> | </div> | ||

| + | <div class="large-3 columns"> | ||

| + | </div> | ||

| + | <div class="large-3 columns"> | ||

| + | </div> | ||

</div> | </div> | ||

| Line 100: | Line 129: | ||

== 3D Visualization System == | == 3D Visualization System == | ||

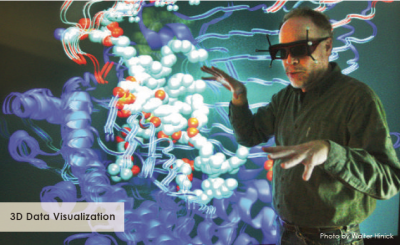

Montana Tech is developing two 3D data visualization systems. Both systems provide an immersive visualization experience (aka virtual reality) through 3D stereoscopic imagery and user tracking systems. These systems allow scientists to directly interact with their data and helps them gain a better understanding of their data generated modeling on the HPC Cluster or collected in the field. Remote data visualization is possible by running [[VisIt]] from the cluster's login node. | Montana Tech is developing two 3D data visualization systems. Both systems provide an immersive visualization experience (aka virtual reality) through 3D stereoscopic imagery and user tracking systems. These systems allow scientists to directly interact with their data and helps them gain a better understanding of their data generated modeling on the HPC Cluster or collected in the field. Remote data visualization is possible by running [[VisIt]] from the cluster's login node. | ||

| − | + | <div class="row"> | |

| − | {| style="width: | + | <div class="large-6 columns"> |

| + | {| style="width: 80%" | ||

|+<span style="color:#925223"> Windows Immersive 3D Visualization System </span> | |+<span style="color:#925223"> Windows Immersive 3D Visualization System </span> | ||

|- | |- | ||

| − | | '''CPU'''|| Dual E5- | + | | '''CPU'''|| Dual E5-2643 v4 (3.4 GHz, 2x 6-cores) |

|- | |- | ||

| '''RAM'''|| 64 GB | | '''RAM'''|| 64 GB | ||

|- | |- | ||

| − | | '''Disk''' || | + | | '''Disk''' || 512 GB SSD + 1TB HD |

| + | |- | ||

| + | | '''GPU''' || Dual nVidia Quadro K5000 | ||

|- | |- | ||

| '''OS''' || Windows 7 | | '''OS''' || Windows 7 | ||

|- | |- | ||

| − | | '''Display''' || 108" projector screen | + | | '''Display''' || 108" 3D projector screen |

|- | |- | ||

| '''Tracking''' || ART SMARTTRACK | | '''Tracking''' || ART SMARTTRACK | ||

|} | |} | ||

| + | </div> | ||

| + | <div class="large-6 columns">[[File:Viz.PNG|400px]] | ||

| + | </div> | ||

| + | </div> | ||

| − | {| style="width: | + | <div class="row"> |

| + | <div class="large-6 columns"> | ||

| + | {| style="width: 80%" | ||

|+<span style="color:#925223"> Linux IQ Station </span> | |+<span style="color:#925223"> Linux IQ Station </span> | ||

|- | |- | ||

| − | | '''CPU'''|| Dual E5- | + | | '''CPU'''|| Dual E5-2670 (2.60 GHz, 8-cores) |

|- | |- | ||

| '''RAM'''|| 128 GB | | '''RAM'''|| 128 GB | ||

|- | |- | ||

| − | | '''Disk''' || | + | | '''Disk''' || 4 TB |

| + | |- | ||

| + | | '''GPU''' || Dual nVidia Quadro 5000 | ||

|- | |- | ||

| '''OS''' || CentOS 6.4 | | '''OS''' || CentOS 6.4 | ||

| Line 132: | Line 172: | ||

| '''Tracking''' || ART SMARTTRACK | | '''Tracking''' || ART SMARTTRACK | ||

|} | |} | ||

| − | = | + | </div> |

| + | <div class="large-6 columns">[[File:Viz1.jpg|400px]] | ||

| + | </div> | ||

| + | </div> | ||

Latest revision as of 12:48, 25 April 2023

HPC Architecture

The Montana Tech HPC system (the oredigger cluster) contains 1 management node, 26 compute nodes, and 91 TB of NFS storage in total. There is an additional computing server (copper). Twenty-two compute nodes contain two 8-core Intel Xeon E5-2660 processors (2.2 GHz) and either 64 or 128 GB of memory. Two of these nodes are GPU Nodes, each with three NVIDIA Tesla K20 accelerators and 128 GB of memory. Hyperthreading is enabled, so 704 threads can run simultaneously on the E5-2660 CPUs alone. The remaining four nodes feature 2nd Generation Intel Xeon Scalable processors (48 CPU cores and 192 GB of RAM per node). Internally, a 40 Gbps InfiniBand (IB) network interconnects the nodes and the storage system.
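The thread count and storage total quoted above follow from the per-node figures. A minimal Python sketch of that arithmetic, assuming two hardware threads per core with hyperthreading enabled (the variable names are illustrative and not part of any cluster tooling):

```python
# Figures taken from the cluster description on this page.
E5_NODES = 22            # compute nodes with dual E5-2660 processors
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 8
THREADS_PER_CORE = 2     # assumption: hyperthreading exposes 2 threads per core

e5_threads = E5_NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET * THREADS_PER_CORE

# NFS capacity per server in TB (nfs0 and nfs1 in the storage table).
nfs_tb = {"nfs0": 25, "nfs1": 66}
total_nfs_tb = sum(nfs_tb.values())

print(e5_threads)    # 704
print(total_nfs_tb)  # 91
```

The 704-thread figure covers only the 22 E5-2660 nodes; the four newer nodes are not included in it.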

The system has a theoretical peak performance of 14.2 TFLOPS without the GPUs. The GPUs alone have a theoretical peak performance of 7.0 TFLOPS for double-precision floating-point operations, so the entire cluster has a theoretical peak performance of over 21 TFLOPS.
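The GPU and whole-cluster figures can be checked with a short calculation. This sketch assumes NVIDIA's published double-precision peak of 1.17 TFLOPS per Tesla K20, a number that does not appear on this page; the 14.2 TFLOPS CPU figure is taken as given rather than re-derived.

```python
GPU_NODES = 2
GPUS_PER_NODE = 3
K20_DP_TFLOPS = 1.17    # assumption: vendor-quoted DP peak per Tesla K20
CPU_PEAK_TFLOPS = 14.2  # from the text above; not re-derived here

gpu_peak = GPU_NODES * GPUS_PER_NODE * K20_DP_TFLOPS
total_peak = CPU_PEAK_TFLOPS + gpu_peak

print(f"GPU peak:   {gpu_peak:.1f} TFLOPS")
print(f"Total peak: {total_peak:.1f} TFLOPS")
```

Theoretical peak values like these are upper bounds; sustained performance on real workloads is typically lower.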

The operating system is Rocky Linux 8.6, and Warewulf is used to maintain and provision the stateless compute nodes.

Head Node

| CPU | Dual E5-2660 (2.2 GHz, 2x 8-cores) |
| RAM | 64 GB |
| Disk | 1 TB SSD |

Copper Server

| CPU | Dual E5-2643 v3 (3.4 GHz, 2x 6-cores) |
| RAM | 128 GB |
| Disk | 1 TB |

| NFS storage | nfs0 - 25 TB |
| | nfs1 - 66 TB |
| Network | Ethernet |
| | 40 Gbps InfiniBand |

4 Compute Nodes (NEW)

| CPU | Dual Xeon Platinum 8260 (2.40 GHz, 2x 24-cores) |
| RAM | 192 GB |
| Disk | 256 GB SSD |
| Nodes | cn31~cn34 |

14 Compute Nodes

| CPU | Dual E5-2660 (2.2 GHz, 2x 8-cores) |
| RAM | 64 GB |
| Disk | 450 GB |
| Nodes | cn0~cn11, cn13, cn14 |

6 Compute Nodes

| CPU | Dual E5-2660 (2.2 GHz, 2x 8-cores) |
| RAM | 128 GB |
| Disk | 450 GB |
| Nodes | cn12, cn15~cn19 |

2 GPU Nodes

| CPU | Dual E5-2660 (2.2 GHz, 2x 8-cores) |
| RAM | 128 GB |
| Disk | 450 GB |
| GPU | Three NVIDIA Tesla K20 |
| Nodes | cn20, cn21 |

3D Visualization System

Montana Tech is developing two 3D data visualization systems. Both systems provide an immersive visualization experience (also known as virtual reality) through 3D stereoscopic imagery and user tracking systems. These systems allow scientists to interact directly with their data and help them gain a better understanding of data generated by modeling on the HPC cluster or collected in the field. Remote data visualization is possible by running VisIt from the cluster's login node.

Windows Immersive 3D Visualization System

| CPU | Dual E5-2643 v4 (3.4 GHz, 2x 6-cores) |
| RAM | 64 GB |
| Disk | 512 GB SSD + 1 TB HDD |
| GPU | Dual NVIDIA Quadro K5000 |
| OS | Windows 7 |
| Display | 108" 3D projector screen |
| Tracking | ART SMARTTRACK |